ADF runtime

In this tutorial we have been executing pipelines that read data from a source and write it to a destination. The Copy Data activity, for example, gives us an auto-scalable source of compute that performs this data transfer for us: the ADF runtime. But what exactly is this ADF runtime?

The Integration Runtime (IR) is the compute infrastructure used by Azure Data Factory and Azure Synapse pipelines to provide data integration capabilities across different network environments. In Data Factory and Synapse pipelines, an activity defines the action to be performed.


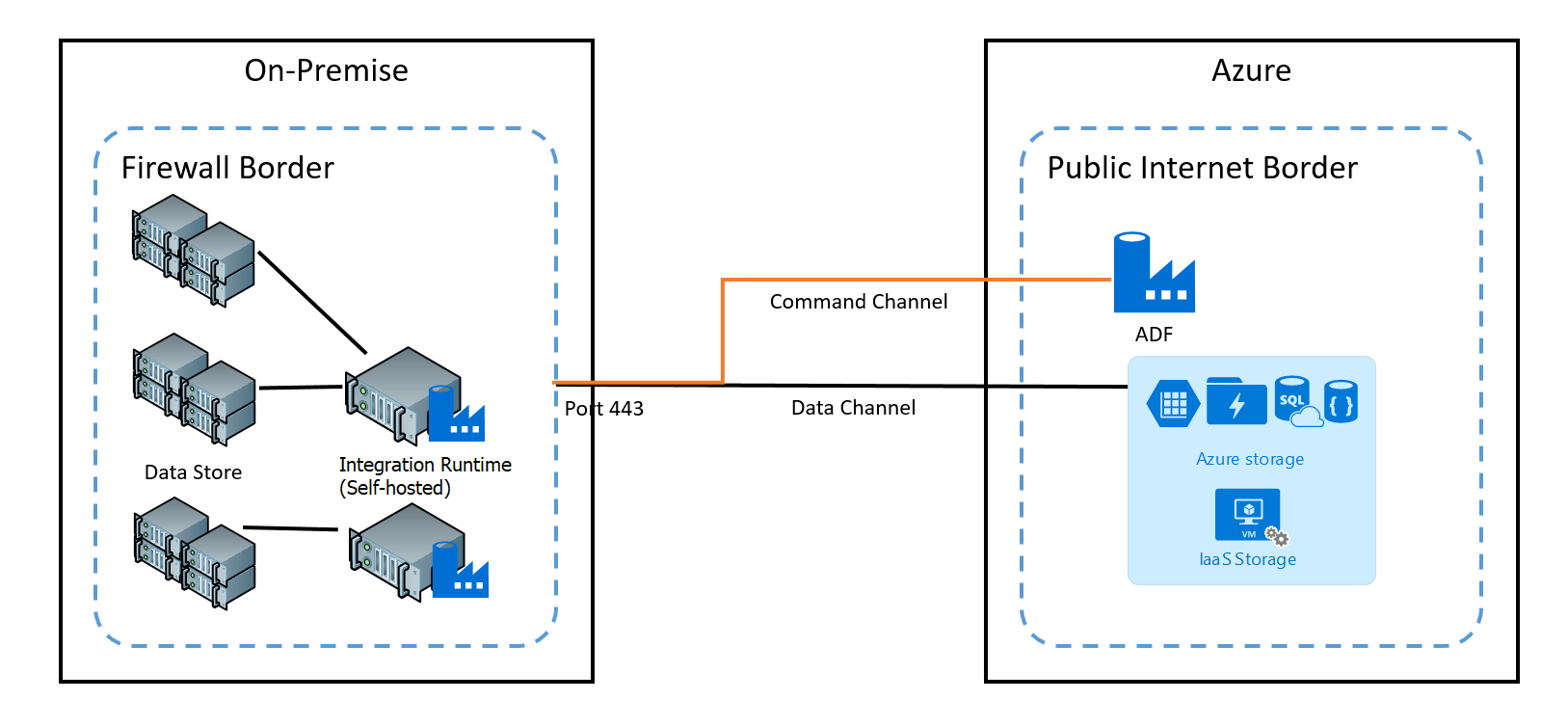

What are integration runtimes in Azure Data Factory? When you run an application on your computer, it uses the computer's resources, such as CPU and memory, to run its tasks. When you run activities in an ADF pipeline, they also need resources to do their job, like copying data or writing a file, and these are provided by the integration runtime. When you create an instance of ADF, you get a default Azure integration runtime, AutoResolveIntegrationRuntime, whose compute region is resolved automatically. If needed, you can add your own integration runtimes, either on Azure or by downloading and installing a self-hosted integration runtime (SHIR) on your own server.

When would I want to use a self-hosted integration runtime?

Use case 1: data sources behind a firewall. When your data sources and destinations are in the cloud, you simply create a linked service, provide a way of authenticating to that service, and the data starts flowing. Things are more complicated, however, if some of your data sources or destinations are on an on-premises server. To reach those data sources you would need a way through the company firewall, and in most cases scary information security officers will refuse it, and rightfully so. A self-hosted IR avoids this problem: it runs inside your own network and only makes outbound connections to the cloud service, so no inbound port has to be opened.

Data Factory offers three different types of integration runtime, from which customers can select the one that best meets their data integration and network environment needs.

The integration runtime acts as the link between an activity and the linked services it uses. One of the data integration capabilities it provides across network environments is data movement: copying data between data stores in public networks and data stores in private networks (on-premises or in a virtual private network). Data movement supports built-in connectors, format conversion, column mapping, and fast, scalable data transfer.

The Microsoft Integration Runtime, the downloadable package that installs a self-hosted integration runtime, is a customer-managed data integration infrastructure used by Azure Data Factory and Azure Synapse Analytics to provide data integration capabilities across different network environments.


The integration runtime is an important part of the infrastructure for the data integration solution provided by Azure Data Factory. When you start designing a solution, you therefore need to consider how it will fit the existing network structure and data sources, as well as its performance, security, and cost. In Azure Data Factory there are three kinds of integration runtime: the Azure integration runtime, the self-hosted integration runtime, and the Azure-SSIS integration runtime. For the Azure integration runtime you can also enable a managed virtual network, which gives it a different architecture from the global Azure integration runtime. This table lists the differences between the integration runtimes in several respects; choose the appropriate one according to your actual needs. For example, custom components and drivers aren't supported on the Azure integration runtime.
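To make the choice more concrete, here is a minimal Python sketch of a simplified decision heuristic. The Requirements fields and the choose_integration_runtime function are hypothetical, not part of any ADF SDK, and the sketch ignores the managed virtual network option, which changes what an Azure IR can reach.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    """Hypothetical summary of what a workload needs (illustrative fields only)."""
    runs_ssis_packages: bool = False      # lift-and-shift of existing SSIS packages
    private_network_stores: bool = False  # e.g. an on-premises SQL Server behind a firewall
    custom_drivers: bool = False          # components/drivers the Azure IR can't load


def choose_integration_runtime(req: Requirements) -> str:
    """Simplified heuristic: pick one of the three integration runtime types."""
    if req.runs_ssis_packages:
        return "Azure-SSIS"
    if req.private_network_stores or req.custom_drivers:
        return "Self-hosted"
    # Everything is reachable over public endpoints: the fully managed Azure IR is enough.
    return "Azure"


if __name__ == "__main__":
    print(choose_integration_runtime(Requirements()))  # Azure
    print(choose_integration_runtime(Requirements(private_network_stores=True)))  # Self-hosted
```

The order of the checks encodes the idea that SSIS workloads need their dedicated runtime regardless of where the data lives; a real decision would also weigh cost, performance, and security, as described above.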


Another use case for the self-hosted IR is when you want to run compute on your own machines instead of in the Azure cloud. A self-hosted IR can be scaled out over several nodes, and the dispatcher node is also a worker node. Scaling out raises capacity: for example, if one node lets you run a maximum of twelve concurrent jobs, then adding three more similar nodes lets you run a maximum of 48 concurrent jobs (that is, 4 x 12). Some property settings make more sense when there are two or more nodes in the self-hosted integration runtime, that is, in a scale-out scenario. A status of Limited is a warning that some nodes might be down.

For a copy activity, which copies data from a source to a sink, the auto-resolve Azure IR makes a best effort to detect your sink data store's location automatically. It then uses the IR in the same region if one is available, or otherwise the closest one in the same geography; if the sink data store's region is not detectable, the IR in the instance's region is used instead.
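The scale-out arithmetic and the best-effort region selection can be sketched in Python as follows. Both functions are hypothetical illustrations of the behavior described above, not ADF code. Two assumptions are baked in: "closest region" is approximated by the alphabetically first region in the same geography, because real distance data isn't modeled, and falling back to the instance region when no IR exists in the sink's geography is a guess.

```python
from typing import Dict, Optional, Set


def max_concurrent_jobs(nodes: int, jobs_per_node: int) -> int:
    """Upper bound on concurrent jobs for a scaled-out self-hosted IR of identical nodes."""
    return nodes * jobs_per_node


def resolve_copy_ir_region(
    sink_region: Optional[str],
    ir_regions: Set[str],
    geography_of: Dict[str, str],
    instance_region: str,
) -> str:
    """Best-effort region choice for an auto-resolve Azure IR running a copy activity."""
    if sink_region is None:
        # Sink location not detectable: use the region of the factory instance.
        return instance_region
    if sink_region in ir_regions:
        # An IR is available in the sink's own region.
        return sink_region
    sink_geo = geography_of.get(sink_region)
    if sink_geo is not None:
        same_geo = sorted(r for r in ir_regions if geography_of.get(r) == sink_geo)
        if same_geo:
            # Stand-in for "closest": distances aren't modeled, so take the first match.
            return same_geo[0]
    # Assumption: with no IR in the sink's geography, fall back to the instance region.
    return instance_region


if __name__ == "__main__":
    geo = {"westeurope": "Europe", "northeurope": "Europe", "eastus": "United States"}
    print(max_concurrent_jobs(4, 12))  # 48
    print(resolve_copy_ir_region("westeurope", {"northeurope", "eastus"}, geo, "eastus"))  # northeurope
    print(resolve_copy_ir_region(None, {"northeurope"}, geo, "eastus"))  # eastus
```

The region names and geography mapping in the usage lines are illustrative data only.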

I have created a pipeline in Azure Data Factory. I want to copy data from my SQL Server instance hosted on my local server. I can connect easily to cloud sources, but not to my on-premises sources.
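The usual answer to this question is a self-hosted IR installed on a machine that can reach the SQL Server. As a minimal sketch, assuming the ADF JSON definition format, the Python below builds a self-hosted IR definition and a SQL Server linked service whose connectVia reference routes the connection through that IR. The names OnPremSqlServer and MySelfHostedIR and the connection string placeholder are hypothetical.

```python
import json


def self_hosted_ir(name: str) -> dict:
    """Definition of a self-hosted IR; the runtime itself is installed on your own server."""
    return {"name": name, "properties": {"type": "SelfHosted"}}


def sql_server_linked_service(name: str, connection_string: str, ir_name: str) -> dict:
    """SQL Server linked service that connects through the named IR via connectVia."""
    return {
        "name": name,
        "properties": {
            "type": "SqlServer",
            "typeProperties": {"connectionString": connection_string},
            "connectVia": {
                "referenceName": ir_name,
                "type": "IntegrationRuntimeReference",
            },
        },
    }


if __name__ == "__main__":
    ir = self_hosted_ir("MySelfHostedIR")
    ls = sql_server_linked_service("OnPremSqlServer", "<connection string>", ir["name"])
    print(json.dumps(ir, indent=2))
    print(json.dumps(ls, indent=2))
```

Only the connectVia block differs from a linked service to a cloud source; without it, the default Azure IR is used and cannot reach the on-premises server.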

Wed, 29 March

When you create an instance of Data Factory or a Synapse workspace, you need to specify its location. If you have strict data compliance requirements and need to ensure that data does not leave a certain geography, you can explicitly create an Azure IR in a certain region and point the linked service to this IR using the connectVia property. The Azure IR is fully managed: Microsoft is responsible for all infrastructure patching, scaling, and maintenance. Without a managed virtual network, however, it can only access data stores and services in public networks. Note that the Lookup and GetMetadata activities are executed on the integration runtime associated with the data store's linked service. For a self-hosted IR, you can also monitor metrics such as the CPU utilization of each node. The following table describes the capabilities and network support for each of the integration runtime types:
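Pinning the region can be sketched the same way. Assuming the ADF JSON format, in which an Azure IR has the type Managed and its region goes in typeProperties.computeProperties.location, the Python below defines a region-pinned IR and attaches it to an existing linked service definition through connectVia. The names WestEuropeIR and MyBlobStorage are hypothetical, and the linked service's typeProperties are left empty as a placeholder.

```python
import json


def azure_ir_in_region(name: str, location: str) -> dict:
    """Azure IR (type "Managed") pinned to one region instead of auto-resolve."""
    return {
        "name": name,
        "properties": {
            "type": "Managed",
            "typeProperties": {"computeProperties": {"location": location}},
        },
    }


def with_connect_via(linked_service: dict, ir_name: str) -> dict:
    """Return a copy of a linked service definition that runs on the named IR."""
    properties = dict(linked_service["properties"])  # shallow copy: the input isn't mutated
    properties["connectVia"] = {"referenceName": ir_name, "type": "IntegrationRuntimeReference"}
    return {**linked_service, "properties": properties}


if __name__ == "__main__":
    ir = azure_ir_in_region("WestEuropeIR", "West Europe")
    blob = {"name": "MyBlobStorage", "properties": {"type": "AzureBlobStorage", "typeProperties": {}}}
    print(json.dumps(ir, indent=2))
    print(json.dumps(with_connect_via(blob, ir["name"]), indent=2))
```

Returning a copy rather than editing the definition in place keeps the original, region-agnostic linked service available, which can help when the same store is used both with and without a compliance constraint.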
