Drop duplicates in PySpark

PySpark is the Python API for Apache Spark, developed by the Apache Spark community, which lets you process data, including in real time, and analyse the results in a local Python environment. Spark DataFrames differ from other data frames in that they distribute the data and follow a schema. Spark can handle stream processing as well as batch processing, which is one reason for its popularity.

There are three common ways to drop duplicate rows from a PySpark DataFrame: dropping rows that are duplicated across all columns, dropping rows that are duplicated across several specific columns, and dropping rows that are duplicated in a single column. Calling dropDuplicates() with no arguments removes rows that have duplicate values across all columns. Passing a list of column names, such as team and position, removes rows that have duplicate values across those columns, so the resulting DataFrame has no rows with duplicate values across both the team and position columns. Passing a single column name in a list, such as team, leaves no rows with duplicate values in the team column.


What is the difference between the PySpark distinct() and dropDuplicates() methods? Both are used to drop duplicate rows and return a new DataFrame containing only unique rows. The main difference is that distinct() takes no arguments and always compares all columns of the DataFrame, whereas dropDuplicates() accepts an optional list of columns and, when columns are passed as arguments, considers only the selected columns when identifying duplicates. Called without arguments, dropDuplicates() compares all columns, just as distinct() does.

In summary, both functions belong to the DataFrame class and return a new DataFrame after eliminating duplicate rows.

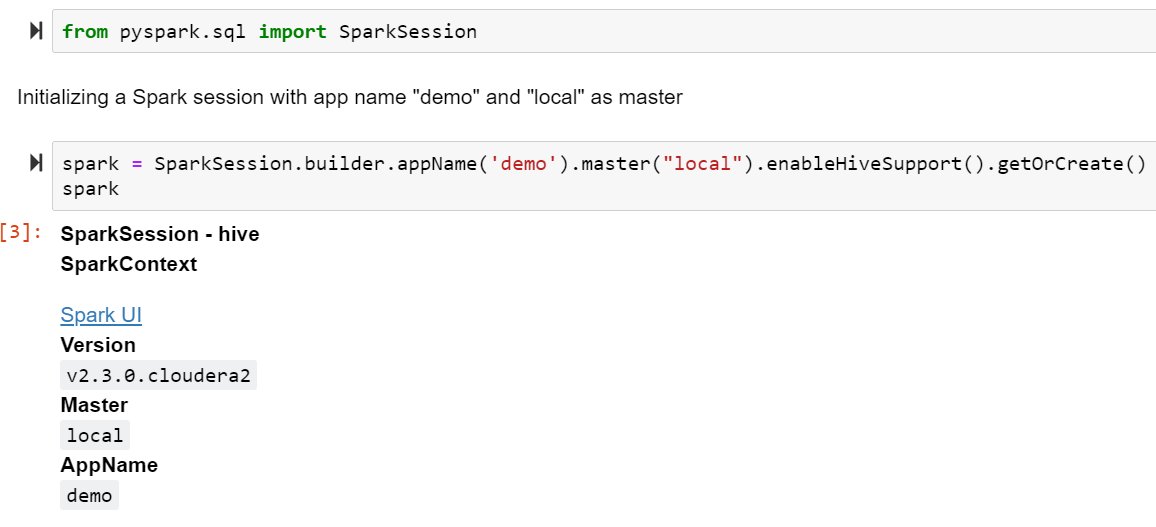

We will create a PySpark data frame consisting of information related to different car racers.

In PySpark, the distinct() function is widely used to drop duplicate rows by comparing all columns of the DataFrame. The dropDuplicates() function is widely used to drop rows based on one or more selected columns. Both are transformations, and transformations are lazy operations: none of them is executed until the user calls an action. This recipe explains what the distinct() and dropDuplicates() functions are and how they are used in PySpark.



In this article, you will learn how to use the distinct() and dropDuplicates() functions with PySpark examples. We use this DataFrame to demonstrate how to get distinct rows over multiple columns. In the example data, the record for the employee named James is duplicated: 2 rows have duplicate values in all columns, and 4 rows have duplicate values in the department and salary columns.


Alternatively, you can run the dropDuplicates() function, which returns a new DataFrame after removing duplicate rows. A PySpark DataFrame requires a session as its entry point, and it processes the data in memory (RAM).

In this tutorial, we will look at how to drop duplicate rows from a PySpark DataFrame with the help of some examples. You can use the PySpark dropDuplicates() function to drop duplicate rows from a PySpark DataFrame.

The distinct() function on a DataFrame returns a new DataFrame after removing the duplicate records. The optional subset parameter takes a column label or a sequence of labels: only those columns are considered when identifying duplicates, and by default all columns are used.
