Flink keyby

In this section you will learn about the APIs that Flink provides for writing stateful programs. Please take a look at Stateful Stream Processing to learn about the concepts behind stateful stream processing.

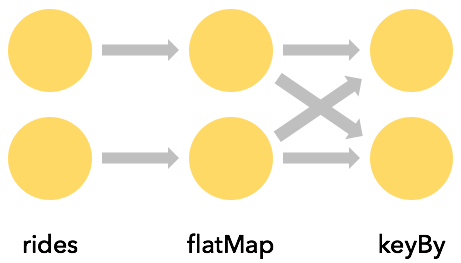

Operators transform one or more DataStreams into a new DataStream. Programs can combine multiple transformations into sophisticated dataflow topologies. Map takes one element and produces one element; for example, a map function can double the values of the input stream. FlatMap takes one element and produces zero, one, or more elements; for example, a flatMap function can split sentences into words.
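The one-to-one and one-to-many behavior described above can be illustrated without a Flink dependency. The following is a minimal sketch using plain `java.util.stream` rather than Flink's `DataStream` API; the class and method names (`MapFlatMapSketch`, `doubleAll`, `splitWords`) are hypothetical and chosen only for illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

// Plain-Java illustration of map and flatMap semantics (not the Flink API).
final class MapFlatMapSketch {

    // One-to-one: every input value yields exactly one output value.
    static List<Integer> doubleAll(List<Integer> input) {
        return input.stream()
                .map(value -> 2 * value)
                .collect(Collectors.toList());
    }

    // One-to-many: every sentence yields zero, one, or more words.
    static List<String> splitWords(List<String> sentences) {
        return sentences.stream()
                .flatMap(sentence -> Arrays.stream(sentence.split(" ")))
                .filter(word -> !word.isEmpty()) // an empty sentence yields no words
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(doubleAll(Arrays.asList(1, 2, 3)));
        System.out.println(splitWords(Arrays.asList("to be", "or not")));
    }
}
```

In Flink itself, the same logic would typically be passed as functions to `map` and `flatMap` on a `DataStream`; the sketch only mirrors the element-level behavior.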


Filter evaluates a boolean function for each element and retains those for which the function returns true; for example, a filter can drop zero values. KeyBy logically partitions a stream into disjoint partitions. All records with the same key are assigned to the same partition. Internally, keyBy is implemented with hash partitioning. There are different ways to specify keys. Reduce combines the current element with the last reduced value and emits the new value. Windows can be defined on already partitioned KeyedStreams. Windows group the data in each key according to some characteristic.
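To make the filter, keyBy, and reduce behavior concrete, here is a minimal plain-Java sketch that does not use the Flink API. It assumes a deliberately simplified partitioning rule (`hashCode` modulo the parallelism), which is only a stand-in for Flink's actual hash partitioning; the names `KeyedReduceSketch`, `partitionFor`, and `rollingSum` are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Plain-Java illustration of filter + keyBy + rolling reduce (not the Flink API).
final class KeyedReduceSketch {

    // Simplified stand-in for hash partitioning (an assumption, not Flink's
    // real scheme): equal keys always map to the same partition index.
    static int partitionFor(Object key, int parallelism) {
        return Math.floorMod(key.hashCode(), parallelism);
    }

    // Drops zero values, then keeps a rolling sum per key and emits
    // the updated sum every time a record for that key arrives.
    static List<String> rollingSum(List<Map.Entry<String, Integer>> records) {
        Map<String, Integer> lastReduced = new HashMap<>();
        List<String> emitted = new ArrayList<>();
        for (Map.Entry<String, Integer> record : records) {
            if (record.getValue() == 0) {
                continue; // filter: retain only non-zero values
            }
            // reduce: combine the current element with the last reduced value
            int updated = lastReduced.merge(record.getKey(), record.getValue(), Integer::sum);
            emitted.add(record.getKey() + "=" + updated);
        }
        return emitted;
    }

    // Small fixed input used for demonstration.
    static List<Map.Entry<String, Integer>> sample() {
        List<Map.Entry<String, Integer>> records = new ArrayList<>();
        records.add(new java.util.AbstractMap.SimpleEntry<>("a", 1));
        records.add(new java.util.AbstractMap.SimpleEntry<>("b", 2));
        records.add(new java.util.AbstractMap.SimpleEntry<>("a", 0));
        records.add(new java.util.AbstractMap.SimpleEntry<>("a", 3));
        return records;
    }

    public static void main(String[] args) {
        System.out.println(rollingSum(sample()));
    }
}
```

Because state is held per key, the sum for `a` is unaffected by records for `b`, which is the property that keyed partitioning provides.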

Start new chain: begin a new operator chain, starting with this operator.

This article explains the basic concepts, installation, and deployment process of Flink. The definition of stream processing may vary. Conceptually, stream processing and batch processing are two sides of the same coin. Their relationship resembles that of a Java ArrayList, whose elements can either be treated directly as a bounded dataset and accessed by index, or be accessed one at a time through an iterator. Figure 1.

If you want to use keyed state, you first need to specify a key on a DataStream that should be used to partition the state and also the records in the stream themselves. This will yield a KeyedStream, which then allows operations that use keyed state. A key selector function takes a single record as input and returns the key for that record. The key can be of any type and must be derived from deterministic computations. The data model of Flink is not based on key-value pairs. Therefore, you do not need to physically pack the data set types into keys and values. Flink also supports tuple keys and expression keys, with which you can specify keys using tuple field indices or expressions for selecting fields of objects. Using a KeySelector function is strictly superior: with Java lambdas, key selectors are easy to use and potentially have less overhead at runtime.
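The idea of a key selector can be sketched in plain Java, with `java.util.function.Function` standing in for Flink's `KeySelector` interface. This is an illustration under assumptions, not Flink code: the `WordCount` type, the `groupByKey` helper, and the sample data are all hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Plain-Java illustration of a key selector (not the Flink API).
final class KeySelectorSketch {

    // Hypothetical record type; the key is a field of the record, so the
    // data does not need to be packed into explicit key-value pairs.
    static final class WordCount {
        final String word;
        final int count;

        WordCount(String word, int count) {
            this.word = word;
            this.count = count;
        }
    }

    // Takes one record at a time, asks the selector for its key, and
    // groups records that share a key. The selector must be deterministic.
    static <T, K> Map<K, List<T>> groupByKey(List<T> records, Function<T, K> keySelector) {
        Map<K, List<T>> groups = new LinkedHashMap<>();
        for (T record : records) {
            K key = keySelector.apply(record);
            groups.computeIfAbsent(key, k -> new ArrayList<>()).add(record);
        }
        return groups;
    }

    // Small fixed input used for demonstration.
    static List<WordCount> sample() {
        return Arrays.asList(
                new WordCount("flink", 1),
                new WordCount("state", 1),
                new WordCount("flink", 2));
    }

    public static void main(String[] args) {
        Map<String, List<WordCount>> groups = groupByKey(sample(), wc -> wc.word);
        groups.forEach((key, values) -> System.out.println(key + " -> " + values.size()));
    }
}
```

The lambda `wc -> wc.word` plays the role that a `KeySelector` passed to `keyBy` would play in a Flink program.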


We can also split the stream into smaller groups by time or by element count through the Window operation. Elements that are greater than 0 are sent back to the feedback channel, and the rest of the elements are forwarded downstream. At runtime, Flink calls the source's run method directly. The traversed state entries are checked and expired ones are cleaned up. Join two elements e1 and e2 of two keyed streams with a common key over a given time interval, so that e1. In the physical model, computing jobs are distributed to different instances based on the computing logic, either through automatic system optimization or by manually designated methods. Add operators based on this object. This strategy has two parameters. Submit for execution env. This is the result of merging two different streams; it allows us to specify different processing logic for the records of each stream, and the processed results form a new DataStream.
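Splitting a stream into groups by element count can be sketched as follows. This is a plain-Java illustration of a tumbling count window, not the Flink windowing API; the class and method names are hypothetical, and the choice to leave an incomplete trailing window unemitted is an assumption of this sketch.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java illustration of a tumbling count window (not the Flink API).
final class CountWindowSketch {

    // Collects elements into consecutive, non-overlapping windows of `size`.
    // A window is emitted only once it is full, so trailing elements that do
    // not fill a window are not emitted (an assumption of this sketch).
    static List<List<Integer>> tumblingCountWindows(List<Integer> elements, int size) {
        List<List<Integer>> windows = new ArrayList<>();
        List<Integer> current = new ArrayList<>();
        for (Integer element : elements) {
            current.add(element);
            if (current.size() == size) {
                windows.add(current);
                current = new ArrayList<>();
            }
        }
        return windows;
    }

    public static void main(String[] args) {
        System.out.println(tumblingCountWindows(Arrays.asList(1, 2, 3, 4, 5), 2));
    }
}
```

A time-based window would follow the same shape, with the "window is full" check replaced by a comparison against a timestamp boundary.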

In the first article of the series, we gave a high-level description of the objectives and required functionality of a Fraud Detection engine.

Each operator in the logical graph has multiple concurrent threads in the physical graph. The getExecutionEnvironment method automatically determines the runtime environment and creates an appropriate environment object. Select the specific splitting logic. In addition to KeyBy, Flink supports other physical grouping methods when exchanging data between the operators. There are different schemes for doing this redistribution. A DAG computing logic graph and an actual runtime physical model. As shown in Figure 7, Flink DataStream objects are strongly-typed. When a new arrives, update the HashMap. By default, operators generated by Flink SQL have a name consisting of the operator type and ID, plus a detailed description. Broadcast State is a special type of Operator State. The TTL configuration is not part of checkpoints or savepoints; rather, it determines how Flink treats state in the currently running job. Therefore, the Flink API is more high-level. This would require only local data transfers instead of transferring data over the network, depending on other configuration values such as the number of slots of TaskManagers.
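As one example of a redistribution scheme other than keying, round-robin distribution spreads records evenly across parallel instances regardless of their content. The sketch below is plain Java and only illustrates the idea; it is not Flink's implementation, and the names `RebalanceSketch` and `roundRobin` are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java illustration of round-robin redistribution (not the Flink API).
final class RebalanceSketch {

    // Assigns record i to instance (i mod parallelism), so load is spread
    // evenly and records with the same value may land on different instances.
    static List<List<String>> roundRobin(List<String> records, int parallelism) {
        List<List<String>> instances = new ArrayList<>();
        for (int i = 0; i < parallelism; i++) {
            instances.add(new ArrayList<>());
        }
        for (int i = 0; i < records.size(); i++) {
            instances.get(i % parallelism).add(records.get(i));
        }
        return instances;
    }

    public static void main(String[] args) {
        System.out.println(roundRobin(Arrays.asList("a", "b", "c", "d", "e"), 2));
    }
}
```

Unlike key-based partitioning, this scheme gives no guarantee that equal records meet on the same instance, so it suits load balancing rather than keyed state.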
