Group by pyspark

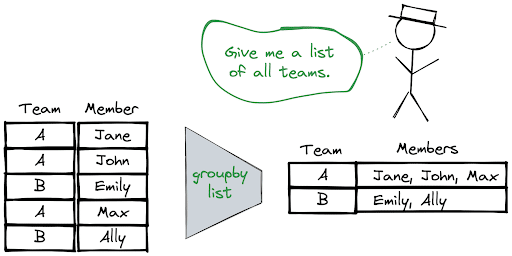

As a quick reminder, PySpark GroupBy is a powerful operation that allows you to perform aggregations on your data.

Related: How to group and aggregate data using Spark and Scala. We can also run groupBy and aggregate on two or more DataFrame columns, for example grouping by department and state and summing the salary and bonus columns. The same pattern works for the other aggregate functions. Using the agg function, we can calculate several aggregations in a single statement with SQL functions such as sum, avg, min, max, mean, etc. In order to use these, we first need to import them from pyspark. For example, we can group on the department column and calculate the sum and avg of salary, and the sum and max of bonus, for each department.

In PySpark, groupBy collects identical values into groups on a DataFrame so that aggregate functions can be applied to the grouped data. We can also group by and aggregate on multiple columns at a time.

groupBy groups the DataFrame using the specified columns, so we can run aggregations on them. See GroupedData for all the available aggregate functions. Each grouping column should be given as a column name (string) or as a Column expression.

In PySpark, the DataFrame groupBy function groups data together based on specified columns, so aggregations can be run on the collected groups. For example, with a DataFrame containing website click data, we may wish to group together all the browser type values contained in a certain column, and then determine an overall count for each browser type. This would allow us to determine the most popular browser type used in website requests. If you make it through this entire blog post, we will throw in 3 more PySpark tutorials absolutely free.

PySpark reading CSV has been covered already. In this example, we are going to use a data. When running the following examples, it is presumed the data. This is shown in the following commands. The purpose of this example is to show that we can pass multiple columns in a single aggregate function. Notice the import of F and the use of withColumn, which returns a new DataFrame by adding a column or replacing an existing column that has the same name.

GroupBy can also be combined with count and with filters on the aggregated columns. For instance, we can group on the department and state columns and use the count function within agg on the result, then filter the grouped data on an aggregated column.

You can use window functions in combination with groupBy to achieve complex analytical tasks. In some cases, you may also need to apply a custom aggregation function. In order to use the agg function, the data first needs to be grouped by applying the groupBy method.

With PySpark's groupBy, you can confidently tackle complex data analysis challenges and derive valuable insights from your data. In this article, we've covered the fundamental concepts and usage of groupBy in PySpark, including syntax, aggregation functions, multiple aggregations, filtering, window functions, and performance optimization. You have also learned how to run groupBy on multiple columns and how to filter data on the aggregated columns.
