Pyspark groupby


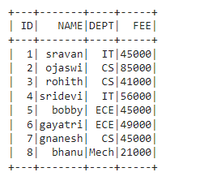

In PySpark, the DataFrame groupBy function groups data together based on specified columns, so aggregations can be run on the collected groups. For example, with a DataFrame containing website click data, we may wish to group together all the browser type values contained in a certain column, and then determine an overall count for each browser type. This would allow us to determine the most popular browser type used in website requests. PySpark reading CSV has been covered already. In this example, we are going to use a data.


As a quick reminder, PySpark GroupBy is a powerful operation that allows you to perform aggregations on your data. It groups the rows of a DataFrame based on one or more columns and then applies an aggregation function to each group. Common aggregation functions include sum, count, mean, min, and max. When you need several of them at once, you can pass multiple aggregation functions to a single agg() call.

In some cases, you may need to apply a custom aggregation function. For instance, a custom median aggregation takes a pandas Series as input and calculates the median value of that Series, with its return type specified as FloatType. Once the custom aggregation function is defined, we can apply it to our DataFrame to compute the median price for each product category. In this example, since we only have one category, Electronics, the output shows the median price for that category.

By understanding how to perform multiple aggregations, group by multiple columns, and even apply custom aggregation functions, you can efficiently analyze your data and draw valuable insights. Keep exploring and experimenting with different GroupBy operations to unlock the full potential of PySpark!


In the pandas API on Spark (pyspark.pandas), GroupBy objects are returned by groupby calls such as DataFrame.groupby(). One of their methods, GroupBy.filter(func), returns a copy of a DataFrame excluding elements from groups that do not satisfy the boolean criterion specified by func.

Spark groupByKey and reduceByKey are transformation operations on key-value RDDs, but they differ in how they combine the values corresponding to each key. groupByKey returns a new RDD where each key is associated with an iterable collection of its corresponding values. For example, if rdd1 is an RDD of key-value pairs, applying groupByKey to rdd1 returns a new RDD rdd2 where each key is associated with a sequence of its corresponding values. To retrieve the values corresponding to a particular key, you can use the lookup method.

reduceByKey(func), by contrast, returns a new RDD where each key is associated with a single reduced value. Here func is a function that takes two values of type V and returns a single value of type V. The function func should be commutative and associative, as it will be applied in a parallel and distributed manner across the values corresponding to each key.


We can also run groupBy and aggregate on two or more DataFrame columns, for example grouping on department and state and summing the salary and bonus columns. The same pattern works with the other aggregate functions. Using the agg function, we can calculate many aggregations at once in a single statement using SQL functions such as sum, avg, min, max, mean, etc. In order to use these, we should import them from pyspark. For example, grouping on the department column, we can calculate the sum and average of salary and the sum and maximum of bonus for each department.


The first step is importing the necessary libraries (for example, import findspark) and creating a sample DataFrame. By default, Spark optimizes DataFrame operations such as groupBy before executing them, which improves performance. For instance, to find the average salary in each department, group by the department column and average the salary column.

Groups the DataFrame using the specified columns, so we can run aggregation on them. See GroupedData for all the available aggregate functions.

Window functions are powerful tools for performing calculations across a group of rows related to the current row; unlike groupBy, they return a value for every input row instead of collapsing each group into a single row. Let's start by exploring the basic syntax of the groupBy operation in PySpark: from pyspark.

April 17, Jagdeesh.
