PyTorch loss functions

Loss functions are fundamental in ML model training, and, in most machine learning projects, there is no way to drive your model into making correct predictions without a loss function. In layman's terms, a loss function is a mathematical function or expression used to measure how well a model is doing on some dataset.

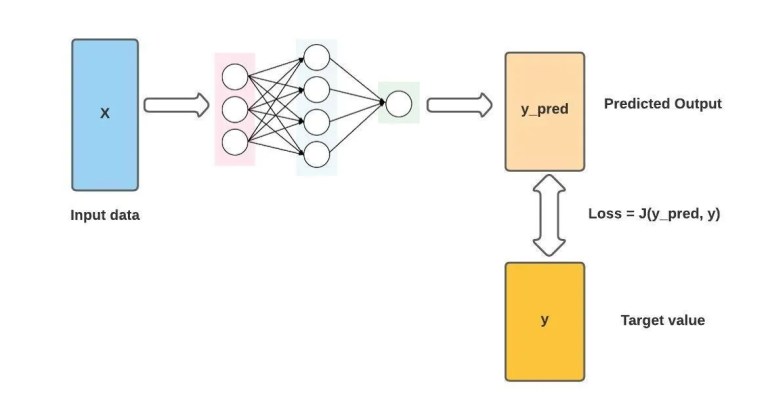

Deep learning training uses a feedback mechanism called a loss function to evaluate mistakes and improve the learning trajectory. In this article, we will go in-depth about loss functions and their implementation in the PyTorch framework. Loss functions measure how close a predicted value is to the actual value. When our model makes predictions that are very close to the actual values on both our training and testing datasets, it means we have a pretty robust model. Loss functions guide the model training process towards correct predictions: the loss quantifies a model's performance on a dataset.

Loss functions are a crucial component in neural network training: every machine learning model is optimized by reducing its loss so that it makes correct predictions. But what exactly are loss functions, and how do you use them? A loss function is an expression used to measure how close the predicted value is to the actual value. It outputs a value called the loss, which tells us how well our model is performing. By reducing this loss value over further training, the model can be optimized to output values that are closer to the actual values.

PyTorch is a popular open-source Python library for building deep learning models effectively. It provides a large number of loss functions that can be used for different problems. There are basically three types of loss functions in PyTorch: classification, regression, and ranking loss functions. Regression losses are mostly for problems that deal with continuous values, such as predicting age or prices. Classification losses, on the other hand, are for problems that deal with discrete values, such as detecting whether an email is spam or ham. Ranking losses are for problems where the relative ordering or similarity of inputs matters.
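
To make the regression and classification cases concrete, here is a minimal sketch using two built-in criteria, `nn.MSELoss` and `nn.CrossEntropyLoss`. The tensors and the ham/spam class indices are made-up illustration values, not data from this article.

```python
import torch
import torch.nn as nn

# Regression: continuous targets (e.g. prices), compared with mean squared error.
predicted_prices = torch.tensor([2.5, 0.0, 2.0])
actual_prices = torch.tensor([3.0, -0.5, 2.0])
mse = nn.MSELoss()(predicted_prices, actual_prices)

# Classification: raw scores (logits) for two classes.
# Assumed encoding for this example only: ham = 0, spam = 1.
logits = torch.tensor([[2.0, -1.0], [0.5, 1.5]])
labels = torch.tensor([0, 1])
ce = nn.CrossEntropyLoss()(logits, labels)

print(f"MSE loss: {mse.item():.4f}")
print(f"Cross-entropy loss: {ce.item():.4f}")
```

Both criteria return a single scalar tensor by default, because their `reduction` argument defaults to `"mean"`.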

As a data scientist or software engineer, you might have come across situations where the standard loss functions available in PyTorch are not enough to capture the nuances of your problem statement. In this blog post, we will discuss how to create custom loss functions in PyTorch and integrate them into your neural network model. A loss function, also known as a cost function or objective function, is used to quantify the difference between the predicted and actual output of a machine learning model. The goal of training a machine learning model is to minimize the value of the loss function, since a low loss indicates that the model is making accurate predictions. PyTorch offers a wide range of loss functions for different problem statements, such as Mean Squared Error (MSE) for regression problems and Cross-Entropy Loss for classification problems. However, there are situations where these standard loss functions are not suitable for your problem statement. A custom loss function in PyTorch is a user-defined function that measures the difference between the predicted output of the neural network and the actual output.
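
One common way to write a custom loss is to subclass `nn.Module` and put the calculation in `forward`. The sketch below is only an illustration: the class name `WeightedMSELoss`, its `under_weight` parameter, and the idea of penalizing under-prediction more heavily are hypothetical choices for this example, not something PyTorch ships.

```python
import torch
import torch.nn as nn

class WeightedMSELoss(nn.Module):
    """Hypothetical custom loss: MSE that penalizes under-prediction more."""

    def __init__(self, under_weight: float = 2.0):
        super().__init__()
        self.under_weight = under_weight

    def forward(self, prediction, target):
        error = prediction - target
        # Where the model predicts too low (error < 0), scale the squared error up.
        weights = torch.where(
            error < 0,
            torch.full_like(error, self.under_weight),
            torch.ones_like(error),
        )
        return (weights * error ** 2).mean()

criterion = WeightedMSELoss(under_weight=2.0)
prediction = torch.tensor([1.0, 3.0], requires_grad=True)
target = torch.tensor([2.0, 2.0])

loss = criterion(prediction, target)
loss.backward()  # autograd differentiates the custom loss like a built-in one

print(loss.item(), prediction.grad)
```

Because the loss is built from differentiable tensor operations, no manual gradient code is needed. A plain Python function with the same body would work too; the module form is convenient when the loss has configuration such as `under_weight`.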

Knowing how well a model is doing on a particular dataset gives the developer insight into many decisions during training, such as switching to a new, more powerful model or even changing the loss function itself to a different type. Speaking of types, several loss functions have been developed over the years, each suited to a particular training task. In this article, we are going to explore the different loss functions that are part of the PyTorch nn module. We will further take a deep dive into how PyTorch exposes these loss functions to users as part of its nn module API by building a custom one. As stated earlier, loss functions tell us how well a model does on a particular dataset. Technically, they do this by measuring how close a predicted value is to the actual value.
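
As a rough illustration of how the loss value gives feedback during training, the sketch below fits a one-parameter-pair linear model to toy data and records the loss at every step. The data (`y = 2x + 1`), the learning rate, and the step count are arbitrary assumptions chosen for the example.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Toy regression data following y = 2x + 1 (illustrative only).
x = torch.linspace(-1, 1, 20).unsqueeze(1)
y = 2 * x + 1

model = nn.Linear(1, 1)
criterion = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

losses = []
for step in range(100):
    optimizer.zero_grad()            # clear gradients from the previous step
    loss = criterion(model(x), y)    # how far predictions are from targets
    loss.backward()                  # gradients of the loss w.r.t. parameters
    optimizer.step()                 # move parameters to reduce the loss
    losses.append(loss.item())

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.6f}")
```

Watching `losses` shrink is the simplest check that the model is moving towards the actual values. A loss that stalls or grows is a hint to revisit the model, the learning rate, or the loss function itself.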

The cosine distance correlates to the angle between the two inputs: the smaller the angle, the closer the inputs and hence the more similar they are. KL divergence only assesses how the predicted probability distribution differs from the ground-truth distribution. The smooth L1 loss uses a squared term if the absolute error falls below one and an absolute term otherwise.

The cross-entropy loss function is used in classification problems; it computes the difference between two probability distributions for a given set of random variables. The logarithm does the penalizing part here. The second part, the sum over all class scores, is a normalization value used to ensure that the output of the softmax layer is always a probability value.

With custom loss functions, you have complete control over the loss calculation process.
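
The roles of the logarithm and the normalization term can be seen by computing cross-entropy by hand and comparing it with `F.cross_entropy`. This is a minimal sketch with made-up logits and targets; the variable names are only for illustration.

```python
import torch
import torch.nn.functional as F

logits = torch.tensor([[1.0, 2.0, 0.5], [0.1, 0.2, 3.0]])
targets = torch.tensor([1, 2])

# Log-softmax: each logit minus the log of the normalizing sum over all classes.
log_probs = logits - torch.logsumexp(logits, dim=1, keepdim=True)

# Negative log-likelihood: take the log-probability of the true class,
# negate it so the loss is positive, and average over the batch.
manual = -log_probs[torch.arange(len(targets)), targets].mean()

builtin = F.cross_entropy(logits, targets)
print(manual.item(), builtin.item())
```

Exponentiating `log_probs` gives rows that sum to one, which is the effect of the normalization term.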

One of these loss functions is used when there is an input tensor and a label tensor containing values of 1 or …

With a squared error, the gradient explodes for very large loss values. The smooth L1 criterion therefore switches to a Mean Absolute Error, whose gradient is almost constant for every loss value, when the absolute difference becomes larger than beta, which eliminates the potential gradient explosion. Squaring the error punishes the model for making big mistakes and tolerates small ones.

NLL uses a negative sign since the probabilities or likelihoods vary between zero and one, and the logarithms of values in this range are negative. The negative sign therefore makes the loss value positive. In PyTorch's cross-entropy formulation, the per-sample loss is $l_n = -w_{y_n} \log \frac{\exp(x_{n,y_n})}{\sum_{c=1}^{C} \exp(x_{n,c})}$, where x is the input, y is the target, w is the weight, C is the number of classes, and N spans the mini-batch dimension.

PyTorch can also be installed on a local machine using the Anaconda package installer.

By implementing custom loss functions, you can enhance your machine learning models and achieve better results. Different loss functions suit different problems, each carefully crafted by researchers to ensure stable gradient flow during training.
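
The switch from a squared term to an absolute term at `beta` can be sketched as a small piecewise function and checked against `nn.SmoothL1Loss`. The helper name `smooth_l1` and the sample values are assumptions made for this example.

```python
import torch
import torch.nn as nn

def smooth_l1(prediction, target, beta=1.0):
    """Hand-written smooth L1: quadratic below beta, linear at or above it."""
    diff = (prediction - target).abs()
    quadratic = 0.5 * diff ** 2 / beta   # small errors: squared term
    linear = diff - 0.5 * beta           # large errors: absolute term
    return torch.where(diff < beta, quadratic, linear).mean()

prediction = torch.tensor([0.2, 1.0, 5.0])
target = torch.zeros(3)

manual = smooth_l1(prediction, target, beta=1.0)
builtin = nn.SmoothL1Loss(beta=1.0)(prediction, target)
print(manual.item(), builtin.item())
```

In the linear branch the gradient magnitude stays constant no matter how large the error is, which is why outliers do not blow up the update the way they would with a pure squared error.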
