SageMaker PyTorch

With multi-model endpoints (MMEs), you can host multiple models on a single serving container and serve all of them behind a single endpoint.
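As a rough sketch of what "behind a single endpoint" means for a caller: each request names the model artifact it wants through the `TargetModel` parameter of the SageMaker runtime `invoke_endpoint` call. The helper `build_mme_request`, the endpoint name, and the artifact name below are hypothetical and exist only for illustration; the actual call is left as a comment because it needs AWS credentials and a deployed endpoint.

```python
import json


def build_mme_request(endpoint_name, target_model, payload):
    """Assemble keyword arguments for a SageMaker runtime invoke_endpoint call.

    TargetModel selects which model artifact behind the shared endpoint
    should serve this request.
    """
    return {
        "EndpointName": endpoint_name,
        "TargetModel": target_model,
        "ContentType": "application/json",
        "Body": json.dumps(payload).encode("utf-8"),
    }


# Hypothetical endpoint and artifact names, for illustration only.
request = build_mme_request("my-mme-endpoint", "model-a.tar.gz", {"inputs": [1, 2, 3]})
print(request["TargetModel"])

# With AWS credentials configured and an endpoint deployed, the request
# could be sent like this:
# import boto3
# response = boto3.client("sagemaker-runtime").invoke_endpoint(**request)
```

Switching to another model on the same endpoint would then only require a different `target_model` value.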

Hugging Face Transformers provides Trainer and pretrained model classes for PyTorch to help reduce the effort of configuring natural language processing (NLP) models. SageMaker Training Compiler automatically compiles your Trainer model if you enable it through the estimator class. A dynamic input shape can trigger recompilation of the model and might increase total training time. For more information about padding options of the Transformers tokenizers, see Padding and truncation in the Hugging Face Transformers documentation.
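To make the point about input shapes concrete, here is a minimal pure-Python sketch (not the Transformers tokenizer itself) that compares padding each batch to its own longest sequence with padding every batch to one fixed length. The `pad_batch` helper and the token IDs are made up for illustration. With a Transformers tokenizer, the fixed-length behavior corresponds to passing `padding="max_length"` together with `max_length` and `truncation=True`.

```python
def pad_batch(batch, pad_id=0, max_length=None):
    """Pad token-id lists to one common length.

    With max_length=None, the batch is padded to its own longest sequence
    (dynamic padding). Otherwise every sequence is truncated or padded to
    max_length (fixed padding).
    """
    target = max_length if max_length is not None else max(len(seq) for seq in batch)
    padded = []
    for seq in batch:
        seq = seq[:target]
        padded.append(seq + [pad_id] * (target - len(seq)))
    return padded


def shape(batch):
    """Return (batch_size, sequence_length) for a padded batch."""
    return (len(batch), len(batch[0]))


# Toy token-id batches of varying lengths (made-up values).
batches = [
    [[5, 6, 7], [8, 9]],
    [[1, 2, 3, 4, 5], [6]],
    [[7, 8], [9, 10, 11, 12]],
]

dynamic_shapes = {shape(pad_batch(b)) for b in batches}
fixed_shapes = {shape(pad_batch(b, max_length=8)) for b in batches}

print(sorted(dynamic_shapes))  # one shape per distinct longest sequence
print(sorted(fixed_shapes))    # a single shape shared by all batches
```

Every distinct shape in the dynamic case is a potential recompilation, whereas the fixed-length case presents the compiler with one shape throughout training, at the cost of some wasted padding.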

These models enable us to build powerful capabilities, such as erasing any unwanted object from an image and modifying or replacing any object in an image by supplying a text instruction. Next, define a MultiDataModel that captures attributes such as the model location, hosting container, and access permissions. You don't need to change the code lines where you use optimizers in your training script.

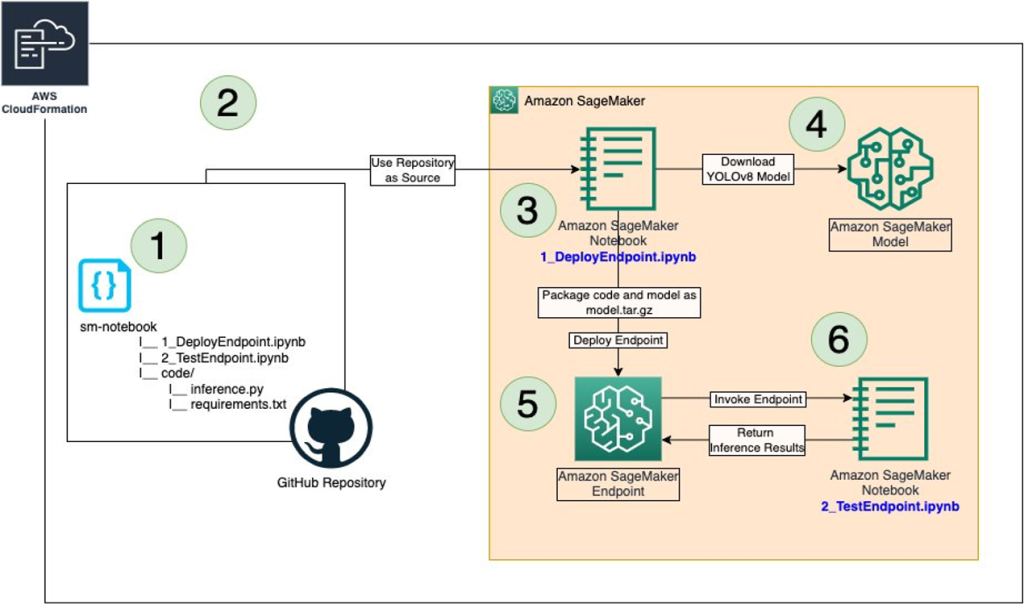

Deploying high-quality, trained machine learning (ML) models to perform either batch or real-time inference is a critical piece of bringing value to customers. However, the ML experimentation process can be tedious: there are many possible approaches, and each takes a significant amount of time to implement. Amazon SageMaker provides a unified interface to experiment with different ML models, and the PyTorch Model Zoo allows us to easily swap our models in a standardized manner. Setting up these ML models as a SageMaker endpoint or SageMaker Batch Transform job for online or offline inference is easy with the steps outlined in this blog post. We will use a Faster R-CNN object detection model to predict bounding boxes for pre-defined object classes. Finally, we will deploy the ML model, perform inference on it using SageMaker Batch Transform, inspect the model output, and learn how to interpret the results. This solution can be applied to any other pre-trained model in the PyTorch Model Zoo. For a list of available models, see the PyTorch Model Zoo documentation. This blog post will walk through the following steps. The code for this blog can be found in this GitHub repository.
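As a small illustration of interpreting object detection output, the sketch below assumes the result for one image follows the torchvision detection convention: a dictionary with `boxes` (as `[x_min, y_min, x_max, y_max]`), `labels`, and `scores`. The exact format written by the Batch Transform job in the blog post may differ, and the `filter_detections` helper and the sample values are made up for illustration.

```python
def filter_detections(prediction, score_threshold=0.5):
    """Keep only detections whose confidence score meets the threshold."""
    kept = []
    for box, label, score in zip(
        prediction["boxes"], prediction["labels"], prediction["scores"]
    ):
        if score >= score_threshold:
            kept.append({"box": box, "label": label, "score": score})
    return kept


# Made-up prediction for a single image.
prediction = {
    "boxes": [
        [10.0, 20.0, 110.0, 220.0],
        [30.0, 40.0, 60.0, 80.0],
        [0.0, 0.0, 15.0, 15.0],
    ],
    "labels": [1, 18, 3],
    "scores": [0.98, 0.61, 0.12],
}

detections = filter_detections(prediction, score_threshold=0.5)
for detection in detections:
    print(detection["label"], detection["score"], detection["box"])
```

The threshold is a judgment call: a higher value trades missed objects for fewer false positives, so it is worth checking against a handful of images before settling on one.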

A generative adversarial network (GAN) is a generative ML model that is widely used in advertising, games, entertainment, media, pharmaceuticals, and other industries. You can use it to create fictional characters and scenes, simulate facial aging, change image styles, produce chemical formulas and synthetic data, and more. For example, the following images show the effect of picture-to-picture conversion. The following images show the effect of synthesizing scenery based on semantic layout. We also introduce a use case for one of the hottest GAN applications: synthetic data generation. We hope this gives you a tangible sense of how GANs are used in real-life scenarios. Of the following two pictures of handwritten digits, one was actually generated by a GAN model. Can you tell which one?

In the example we provided, we also show how you can list models and dynamically add new models using the SDK. This new model server support gives you all the benefits of MMEs while still using the serving stack that TorchServe customers are most familiar with. You can see cost savings when hosting the three models with one endpoint, and for use cases with hundreds or thousands of models, the savings are much greater.

Recently, generative AI applications have captured widespread attention and imagination. Businesses can benefit from increased content output, cost savings, improved personalization, and enhanced customer experience. The model will then change the highlighted object based on the provided instructions. The detailed illustration of this user flow is demonstrated below.

The computational graph gets compiled and executed when xm. For a complete list of parameters, refer to the GitHub repo.

Starting today, you can easily train and deploy your PyTorch deep learning models in Amazon SageMaker. As with the other frameworks that SageMaker supports, you can write your PyTorch script as you normally would and rely on Amazon SageMaker training to handle setting up the distributed training cluster, transferring data, and even hyperparameter tuning. On the inference side, Amazon SageMaker provides a managed, highly available online endpoint that can be scaled automatically as needed.

Extend the TorchServe container

The first step is to prepare the model hosting container. The last required file for TorchServe is model-config. It loads the pre-trained model checkpoints and applies the preprocess and postprocess methods to the input and output data. This action sends the pixel coordinates and the original image to a generative AI model, which generates a segmentation mask for the object. You don't need to change your code when you use the transformers.
