Spark dataframe

Spark SQL is a Spark module for structured data processing. Unlike the basic Spark RDD API, the interfaces provided by Spark SQL give Spark more information about the structure of both the data and the computation being performed. Internally, Spark SQL uses this extra information to perform extra optimizations. The same execution engine is used regardless of which API or language expresses the computation. This unification means that developers can easily switch back and forth between different APIs based on which provides the most natural way to express a given transformation. All of the examples on this page use sample data included in the Spark distribution and can be run in the spark-shell, pyspark shell, or sparkR shell.
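As a rough illustration of switching between APIs, the sketch below expresses one query twice: once with DataFrame methods and once in SQL against a temporary view. The function names, the view name people, the name and age columns, and the two placeholder rows are all assumptions made for this example.

```python
def adults_with_dataframe_api(df, min_age):
    """Select the names of people at least min_age old using DataFrame methods."""
    return df.filter(df["age"] >= min_age).select("name")


def adults_with_sql(spark, df, min_age):
    """Express the same query in SQL against a temporary view of df."""
    df.createOrReplaceTempView("people")  # hypothetical view name
    return spark.sql(f"SELECT name FROM people WHERE age >= {int(min_age)}")


def main():
    # Imported inside main() so the helpers above can be loaded without Spark.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName("api-switch-example").getOrCreate()
    # Placeholder rows, made up for illustration.
    df = spark.createDataFrame([("Ann", 34), ("Bo", 17)], ["name", "age"])
    adults_with_dataframe_api(df, 18).show()
    adults_with_sql(spark, df, 18).show()
    spark.stop()
```

Calling main() in an environment with PySpark installed should show the same single-column result from both functions, since both forms are planned by the same engine.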

This tutorial shows you how to load and transform data. By the end of this tutorial, you will understand what a DataFrame is and be familiar with the following tasks: creating a DataFrame with Python, and viewing and interacting with a DataFrame. A DataFrame is a two-dimensional labeled data structure with columns of potentially different types.

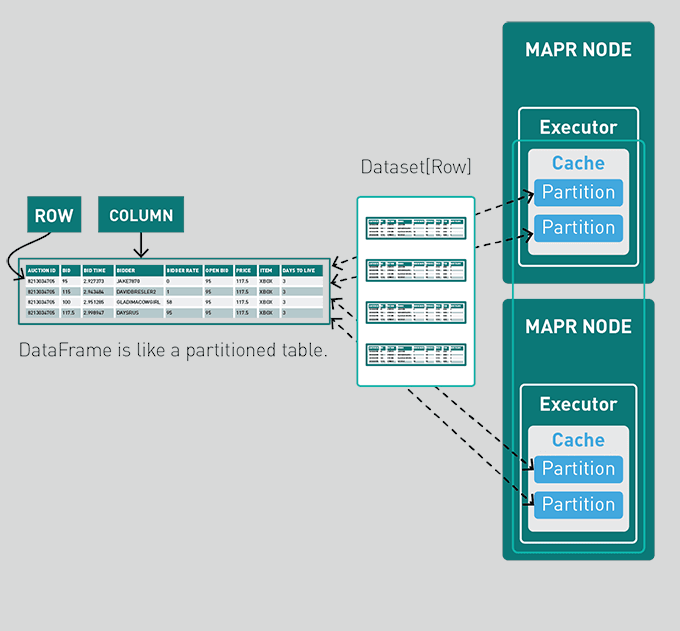

Spark has an easy-to-use API for handling structured and unstructured data called the DataFrame. Every DataFrame has a blueprint called a schema. A schema can contain universal data types, such as string and integer types, as well as data types specific to Spark, such as the struct type.

In Spark, DataFrames are distributed collections of data organized into rows and columns. Each column in a DataFrame has a name and an associated type. DataFrames are similar to traditional database tables, which are structured and concise. Conceptually, a DataFrame is like a table in a relational database, with additional optimization techniques applied under the hood. Spark DataFrames can be created from various sources, such as Hive tables, log tables, external databases, or existing RDDs. DataFrames allow the processing of huge amounts of data.

RDDs have several limitations by comparison. When there is not much storage space in memory or on disk, RDDs do not function properly, as they get exhausted. Spark RDDs also have no concept of a schema, the structure of a database that defines its objects. RDDs store both structured and unstructured data together, which is not very efficient. RDDs have no built-in optimization engine, so Spark cannot automatically rewrite RDD code to run more efficiently. Finally, RDDs do not allow us to debug errors during the runtime.

These jars only need to be present on the driver, but if you are running in YARN cluster mode then you must ensure they are packaged with your application.

Spark SQL can also be used to read data from an existing Hive installation. For more on how to configure this feature, please refer to the Hive Tables section. A Dataset is a distributed collection of data.

An Apache Spark DataFrame is a distributed collection of data organized into named columns, similar to a table in a relational database. It is designed to process large-scale structured and semi-structured data efficiently in distributed computing environments. This guide covers Spark DataFrames from basic concepts to advanced operations.

When writing output, partitionBy creates a directory structure with one directory per distinct value of the partition column. Thus, it has limited applicability to columns with high cardinality.

Datasets are similar to RDDs; however, instead of using Java serialization or Kryo, they use a specialized Encoder to serialize the objects for processing or transmitting over the network.

In the Python type mapping, FloatType corresponds to float. Note: numbers will be converted to 4-byte single-precision floating point numbers at runtime.

One configuration option, when set to true, enables the metadata-only query optimization, which uses the table's metadata to produce the partition columns instead of performing table scans.

The following code example creates a DataFrame named df1 with city population data and displays its contents.
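A minimal sketch of such an example might look like the following. The rows are placeholders, not real statistics, and the column names, the helper build_df1, and the application name are assumptions chosen for illustration.

```python
# Placeholder rows: the city names, state codes, and populations are made up.
CITY_ROWS = [
    ("Example City A", "AA", 120_000),
    ("Example City B", "BB", 45_500),
    ("Example City C", "AA", 980_250),
]
CITY_COLUMNS = ["city", "state", "population"]  # assumed column names


def build_df1(spark):
    """Create df1 from the in-memory rows; Spark infers the column types."""
    return spark.createDataFrame(CITY_ROWS, schema=CITY_COLUMNS)


def main():
    # Imported inside main() so the definitions above can be loaded without Spark.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName("df1-example").getOrCreate()
    df1 = build_df1(spark)
    df1.printSchema()  # column names and inferred types
    df1.show()         # displays the rows as a text table
    spark.stop()
```

In a pyspark shell, where a session named spark already exists, build_df1(spark).show() would be enough to display the contents.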

PySpark DataFrames are lazily evaluated.
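Lazy evaluation means that transformations such as filter and select only describe a query, and Spark runs it when an action such as collect or show asks for results. The sketch below illustrates the split; the helper large_cities, the column names, and the placeholder rows are assumptions for this example.

```python
def large_cities(df, threshold):
    """Build a query for cities above threshold; no Spark job runs here."""
    # filter() and select() are transformations: they only extend the query plan.
    return df.filter(df["population"] > threshold).select("city", "population")


def main():
    # Imported inside main() so large_cities can be loaded without Spark.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName("lazy-eval-example").getOrCreate()
    # Placeholder rows, made up for illustration.
    df = spark.createDataFrame(
        [("Example City A", 120_000), ("Example City B", 45_500)],
        ["city", "population"],
    )
    query = large_cities(df, 100_000)  # still only a plan
    query.explain()                    # prints the plan without running the query
    rows = query.collect()             # action: this triggers execution
    print(rows)
    spark.stop()
```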

Requirements: to complete the following tutorial, you must be logged into a Databricks workspace.

To create a basic SparkSession, just use SparkSession. When reading text data, the path can be either a single text file or a directory storing text files. One way to build a DataFrame uses reflection to generate the schema of an RDD that contains specific types of objects.
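As a minimal sketch of reflection-based schema inference on text input, the code below reads lines of the form "name, age", converts each to a Row, and lets Spark infer column names and types from those Row objects. The line format, the helper names, the file path people.txt, and the use of the standard SparkSession.builder entry point are assumptions for illustration.

```python
def parse_person(line):
    """Turn a 'name, age' text line into a dict of typed fields."""
    name, age = line.split(",")
    return {"name": name.strip(), "age": int(age)}


def people_dataframe(spark, path):
    """Read text lines from path and infer the schema from Row objects."""
    from pyspark.sql import Row  # imported here so parse_person works without Spark

    # path may be a single text file or a directory storing text files.
    lines = spark.sparkContext.textFile(path)
    rows = lines.map(lambda line: Row(**parse_person(line)))
    # Column names and types are inferred from the Row objects.
    return spark.createDataFrame(rows)


def main():
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName("infer-schema-example").getOrCreate()
    # Hypothetical path: point this at a real file of "name, age" lines.
    df = people_dataframe(spark, "people.txt")
    df.printSchema()
    spark.stop()
```

Inference keeps the code short, but it depends on the rows Spark inspects; when the column types are known in advance, passing an explicit schema to createDataFrame is the more predictable choice.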
