Stable diffusion huggingface

Our library is designed with a focus on usability over performance, simple over easy, and customizability over abstractions. For more details about installing PyTorch and Flax, please refer to their official documentation. You can also dig into the models and schedulers toolbox to build your own diffusion system. Check out the Quickstart to launch your diffusion journey today!

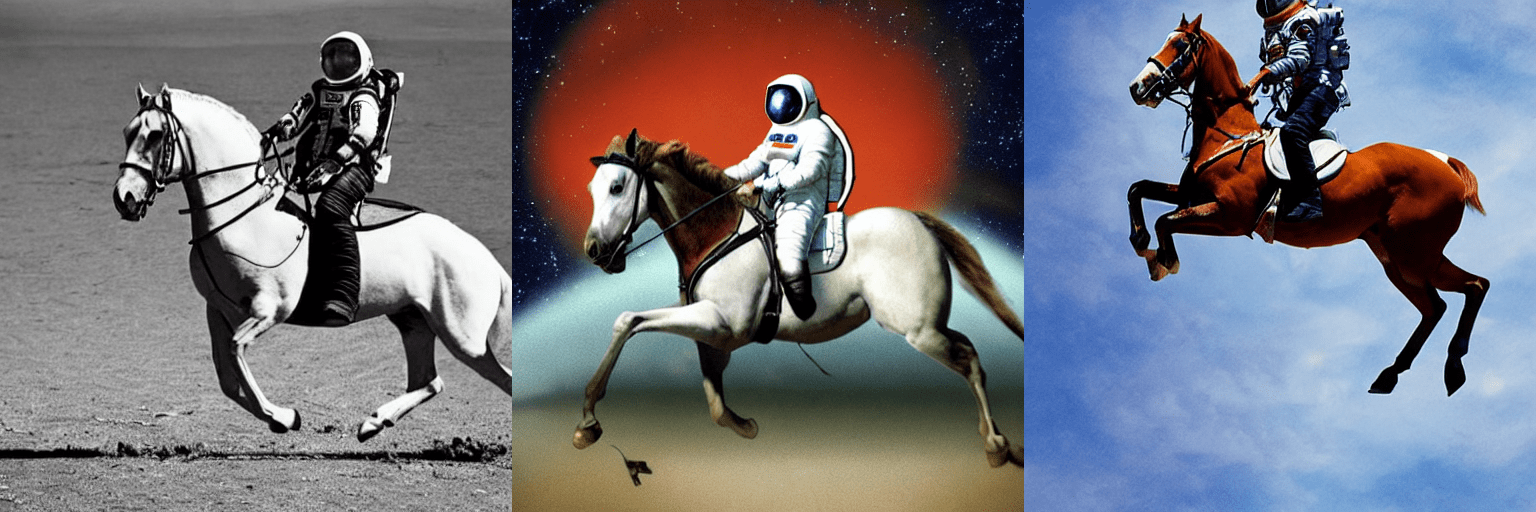

Stable Diffusion is a latent text-to-image diffusion model capable of generating photo-realistic images given any text input. If you are looking for the weights to be loaded into the CompVis Stable Diffusion codebase, come here. Model Description: This is a model that can be used to generate and modify images based on text prompts. Resources for more information: GitHub Repository, Paper. If GPU memory is limited, you can tell diffusers to expect the weights to be in float16 precision.
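As a minimal sketch of the float16 option, the snippet below loads a pipeline in half precision when a CUDA GPU is present and falls back to float32 otherwise. The checkpoint ID `CompVis/stable-diffusion-v1-4` and the helper names `pick_dtype_name` and `load_pipeline` are assumptions chosen for illustration; `torch` and `diffusers` are assumed to be installed.

```python
def pick_dtype_name(cuda_available: bool) -> str:
    """Half precision on a GPU, full precision on CPU."""
    return "float16" if cuda_available else "float32"


def load_pipeline(model_id: str = "CompVis/stable-diffusion-v1-4"):
    """Load a Stable Diffusion pipeline (downloads weights on first call).

    The default model_id is an assumption; substitute the checkpoint you use.
    """
    # Imported lazily so pick_dtype_name works without torch or diffusers.
    import torch
    from diffusers import StableDiffusionPipeline

    cuda = torch.cuda.is_available()
    dtype = getattr(torch, pick_dtype_name(cuda))
    # torch_dtype tells diffusers which precision to load the weights in.
    pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=dtype)
    return pipe.to("cuda" if cuda else "cpu")
```

With a pipeline loaded this way, `load_pipeline()("a photograph of an astronaut riding a horse").images[0]` would return a PIL image. Half precision roughly halves the memory taken by the weights, which is why it helps on small GPUs.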

This model card focuses on the model associated with the Stable Diffusion v2 model, available here. This stable-diffusion-2 model is resumed from stable-diffusionbase base-ema. Resumed for another k steps on x images. Model Description: This is a model that can be used to generate and modify images based on text prompts. Resources for more information: GitHub Repository.

If you don't swap the scheduler, the pipeline runs with the default DDIM; it can be swapped for another scheduler such as EulerDiscreteScheduler.

The model should not be used to intentionally create or disseminate images that create hostile or alienating environments for people. This includes generating images that people would foreseeably find disturbing, distressing, or offensive, or content that propagates historical or current stereotypes. The model was not trained to produce factual or true representations of people or events, so using it to generate such content is out of scope for its abilities. Using the model to generate content that is cruel to individuals is a misuse of this model.
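The scheduler swap mentioned above can be sketched as follows. This is a hedged example, not the definitive recipe: the function name `load_with_euler` is hypothetical, the repository ID `stabilityai/stable-diffusion-2` is assumed to be the checkpoint in question, and a CUDA GPU plus installed `torch` and `diffusers` are assumed.

```python
def load_with_euler(model_id: str = "stabilityai/stable-diffusion-2"):
    """Load a Stable Diffusion v2 pipeline using EulerDiscreteScheduler.

    Assumes a CUDA GPU; model_id is an assumed repository ID.
    """
    # Lazy imports keep this module importable without the heavy dependencies.
    import torch
    from diffusers import EulerDiscreteScheduler, StableDiffusionPipeline

    # Read the scheduler config stored in the repo's "scheduler" subfolder.
    scheduler = EulerDiscreteScheduler.from_pretrained(
        model_id, subfolder="scheduler"
    )
    # Passing scheduler= overrides the pipeline's default scheduler.
    pipe = StableDiffusionPipeline.from_pretrained(
        model_id, scheduler=scheduler, torch_dtype=torch.float16
    )
    return pipe.to("cuda")
```

Leaving out the `scheduler=` argument keeps whichever scheduler the checkpoint ships with, so the swap is a one-line change.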

The intended use of this model is with the Safety Checker in Diffusers.

Stable Diffusion is a latent text-to-image diffusion model capable of generating photo-realistic images given any text input. This model card gives an overview of all available model checkpoints. For more detailed model cards, please have a look at the model repositories listed under Model Access. For the first version, four model checkpoints are released. Higher versions have been trained for longer and are thus usually better in terms of image generation quality than lower versions.

This repository contains Stable Diffusion models trained from scratch and will be continuously updated with new checkpoints. The following list provides an overview of all currently available models. More coming soon. Instructions are available here. New stable diffusion model Stable Diffusion 2. Same number of parameters in the U-Net as 1. The above model is finetuned from SD 2. Added a x4 upscaling latent text-guided diffusion model. New depth-guided stable diffusion model, finetuned from SD 2.

For more information, you can check out the official blog post. Since its public release, the community has done an incredible job of working together to make the Stable Diffusion checkpoints faster, more memory efficient, and more performant. This notebook walks you through the improvements one by one so you can best leverage StableDiffusionPipeline for inference. To begin with, it is most important to speed up Stable Diffusion as much as possible, so as to generate as many pictures as possible in a given amount of time. We aim at generating a beautiful photograph of an old warrior chief and will later try to find the best prompt to generate such a photograph. See the documentation on reproducibility here for more information. The default run we did above used full float32 precision and ran the default number of inference steps.
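To compare optimizations such as lower precision or fewer inference steps, it helps to measure time per image and to fix the random seed so that runs are comparable. The sketch below shows one way to do both; the helper names `seconds_per_call` and `seeded_generator` are hypothetical, and `torch` is only needed for the second helper.

```python
import time


def seconds_per_call(fn, repeats: int = 3) -> float:
    """Average wall-clock seconds taken by one call of fn()."""
    start = time.perf_counter()
    for _ in range(repeats):
        fn()
    return (time.perf_counter() - start) / repeats


def seeded_generator(seed: int = 0, device: str = "cuda"):
    """Return a seeded torch.Generator so repeated runs start from the same noise."""
    import torch  # lazy import: only required when this helper is called

    return torch.Generator(device).manual_seed(seed)
```

Given a loaded pipeline `pipe` and a `prompt`, a call such as `seconds_per_call(lambda: pipe(prompt, generator=seeded_generator(0), num_inference_steps=20))` would time a 20-step run; the step count here is only an example value.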

Use it with the stablediffusion repository: download the v-ema.

In the Safety Checker, the concepts are passed into the model with the generated image and compared to a hand-engineered weight for each NSFW concept. To help you get the most out of the Stable Diffusion pipelines, here are a few tips for improving performance and usability.

Training Data: The model developers used the following dataset for training the model: LAION-2B (en) and subsets thereof (see next section).

Training Procedure: Stable Diffusion v2 is a latent diffusion model which combines an autoencoder with a diffusion model that is trained in the latent space of the autoencoder. We observe some degree of memorization for images that are duplicated in the training data.

Misuse also includes sexual content without consent of the people who might see it.
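The safety check described above, in which concepts are compared with the generated image against a per-concept weight, can be illustrated with a toy sketch. It assumes the comparison is a cosine similarity between an image embedding and one embedding per concept; the function names, vectors, and threshold values are all made up for illustration and are not the Diffusers implementation.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def flagged_concepts(image_embedding, concept_embeddings, thresholds):
    """Return the names of concepts whose similarity exceeds their threshold.

    concept_embeddings maps concept name -> vector; thresholds maps the same
    names -> hand-chosen cut-off values (hypothetical numbers in this sketch).
    """
    flagged = []
    for name, embedding in concept_embeddings.items():
        if cosine_similarity(image_embedding, embedding) > thresholds[name]:
            flagged.append(name)
    return flagged
```

An image would be treated as unsafe when this list is non-empty; the per-concept thresholds play the role of the hand-engineered weights.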

The inpainting checkpoint follows the mask-generation strategy presented in LAMA; the mask, in combination with the latent VAE representations of the masked image, is used as additional conditioning. During training, images are encoded through an encoder, which turns images into latent representations. For more information, we recommend taking a look at the official documentation here.

Bias: While the capabilities of image generation models are impressive, they can also reinforce or exacerbate social biases.
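To make the encoding step concrete, the sketch below computes the shape of the latent representation for a given image size. The downsampling factor of 8 and the 4 latent channels are assumptions taken from the published Stable Diffusion model cards rather than from this text, and the function name `latent_shape` is hypothetical.

```python
def latent_shape(height: int, width: int, factor: int = 8, channels: int = 4):
    """Shape (channels, h, w) of the latent for an image of height x width.

    factor and channels are assumed defaults, not values stated in this article.
    """
    if height % factor or width % factor:
        raise ValueError("height and width must be multiples of the factor")
    return (channels, height // factor, width // factor)
```

Under these assumptions a 512x512 RGB image maps to a 4x64x64 latent, which is why running the diffusion model in latent space is far cheaper than running it on pixels.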
