Tacotron 2 github

Yet another PyTorch implementation of Tacotron 2 with reduction factor and faster training speed. This implementation supports both single- and multi-speaker TTS and several techniques to enforce the robustness and efficiency of the model.

Unlike many previous implementations, this is a kind of Comprehensive Tacotron2: the model supports both single- and multi-speaker TTS and several techniques, such as a reduction factor, to enforce the robustness of the decoder alignment. The model can learn alignment in only 5k steps. Note that only a batch size of 1 is currently supported due to the autoregressive model architecture.
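As a rough illustration of what a reduction factor does, the sketch below groups every r consecutive mel frames into a single decoder target, so the decoder needs r times fewer steps to cover the same utterance. This is a minimal NumPy sketch and not code from any of the repositories described here; the function name `group_frames`, the zero padding, and the 80-bin mel size are assumptions made for the example.

```python
import numpy as np

def group_frames(mel, r, pad_value=0.0):
    """Group r consecutive mel frames into one decoder target.

    mel: array of shape (T, n_mels).
    Returns an array of shape (ceil(T / r), r * n_mels).
    Hypothetical helper for illustration only.
    """
    n_frames, n_mels = mel.shape
    # Pad the time axis so the frame count is a multiple of r.
    pad = (-n_frames) % r
    padded = np.pad(mel, ((0, pad), (0, 0)), constant_values=pad_value)
    # Row-major reshape places frames 0..r-1 side by side in row 0, and so on.
    return padded.reshape(-1, r * n_mels)

mel = np.random.rand(7, 80)      # 7 frames, 80 mel bins (assumed sizes)
grouped = group_frames(mel, r=3)
print(grouped.shape)             # (3, 240)
```

With r = 3, seven frames are padded to nine and the decoder would run for three steps instead of seven, which is where the shorter alignment path and faster training come from.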


Tacotron 2 - PyTorch implementation with faster-than-realtime inference. This implementation includes distributed and automatic mixed precision support and uses the LJSpeech dataset. Visit our website for audio samples using our published Tacotron 2 and WaveGlow models. Training using a pre-trained model can lead to faster convergence. By default, the dataset-dependent text embedding layers are ignored. When performing mel-spectrogram to audio synthesis, make sure Tacotron 2 and the mel decoder were trained on the same mel-spectrogram representation. This implementation uses code from the following repos: Keith Ito, Prem Seetharaman, as described in our code.
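Warm-starting from a pre-trained model while ignoring the dataset-dependent layers can be pictured as filtering the checkpoint before merging it into a freshly initialized model. The sketch below uses plain dictionaries as stand-ins for PyTorch state dicts so that it runs without any dependencies. The helper name `warm_start` and the layer keys (`embedding.weight`, `decoder.linear.weight`) are assumptions for illustration, not necessarily the names used in the repository.

```python
def warm_start(model_state, checkpoint_state, ignore_layers):
    """Merge checkpoint weights into a fresh model state, skipping ignored layers.

    Both states are plain dicts mapping layer names to weights
    (stand-ins for PyTorch state dicts). Hypothetical helper.
    """
    # Drop the layers that depend on the dataset (e.g. the symbol embedding).
    kept = {k: v for k, v in checkpoint_state.items() if k not in ignore_layers}
    # Start from the fresh initialization and overwrite everything else.
    merged = dict(model_state)
    merged.update(kept)
    return merged

# Toy weights: lists stand in for tensors.
fresh = {"embedding.weight": [0.0, 0.0], "decoder.linear.weight": [0.0]}
pretrained = {"embedding.weight": [9.0, 9.0], "decoder.linear.weight": [1.5]}

state = warm_start(fresh, pretrained, ignore_layers=["embedding.weight"])
print(state)
```

The embedding keeps its fresh initialization because a new dataset may use a different symbol set, while the remaining layers reuse the pre-trained weights.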


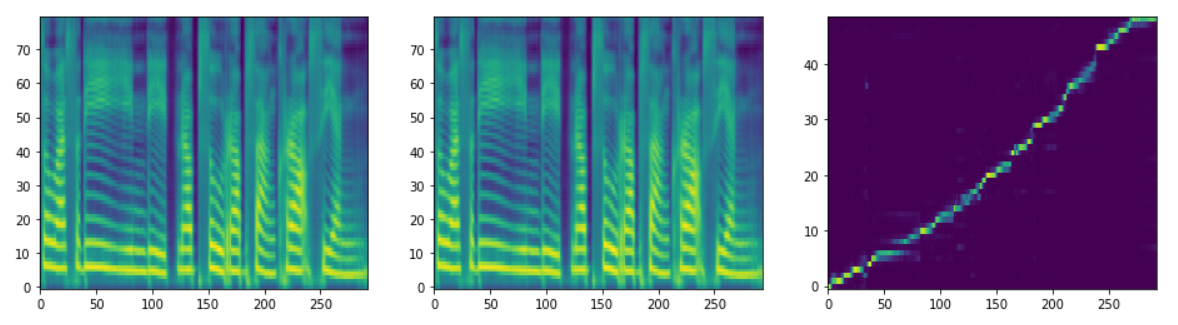

The project is highly based on these. I made some modifications to improve the speed and performance of both training and inference. Currently, only LJ Speech is supported. You can modify hparams. You can find alignment images and synthesized audio clips during training.


The previous tree shows the current state of the repository (separate training, one step at a time). To synthesize audio in an end-to-end (text to audio) manner, both models work together. The quality isn't as good as Google's demo yet, but hopefully it will get there someday.

While browsing the Internet, I have noticed a large number of people claiming that Tacotron-2 is not reproducible, or that it is not robust enough to work on datasets other than the Google internal speech corpus. Although some open-source works have proven to give good results with the original Tacotron or even with WaveNet, it still seemed a little harder to reproduce the Tacotron 2 results with high fidelity to the descriptions in the Tacotron-2 (T2) paper. In this complementary documentation, I will mostly try to cover some ambiguities where understandings might differ, proving in the process that T2 actually works with an open-source speech corpus such as the LJSpeech dataset.

To have an overview of our progress on this project, please refer to this discussion. This can greatly reduce the amount of data required to train a model. Alternatively, one can build the Docker image to ensure everything is set up automatically and use the project inside the Docker containers. Alternatively, you can run eval. If you are not an Anaconda user, replace pip with pip3 and python with python3. Update: a recent fix to gradient clipping by candlewill may have fixed this.
