Keras LSTM

Note: this post is from See this tutorial for an up-to-date version of the code used here.

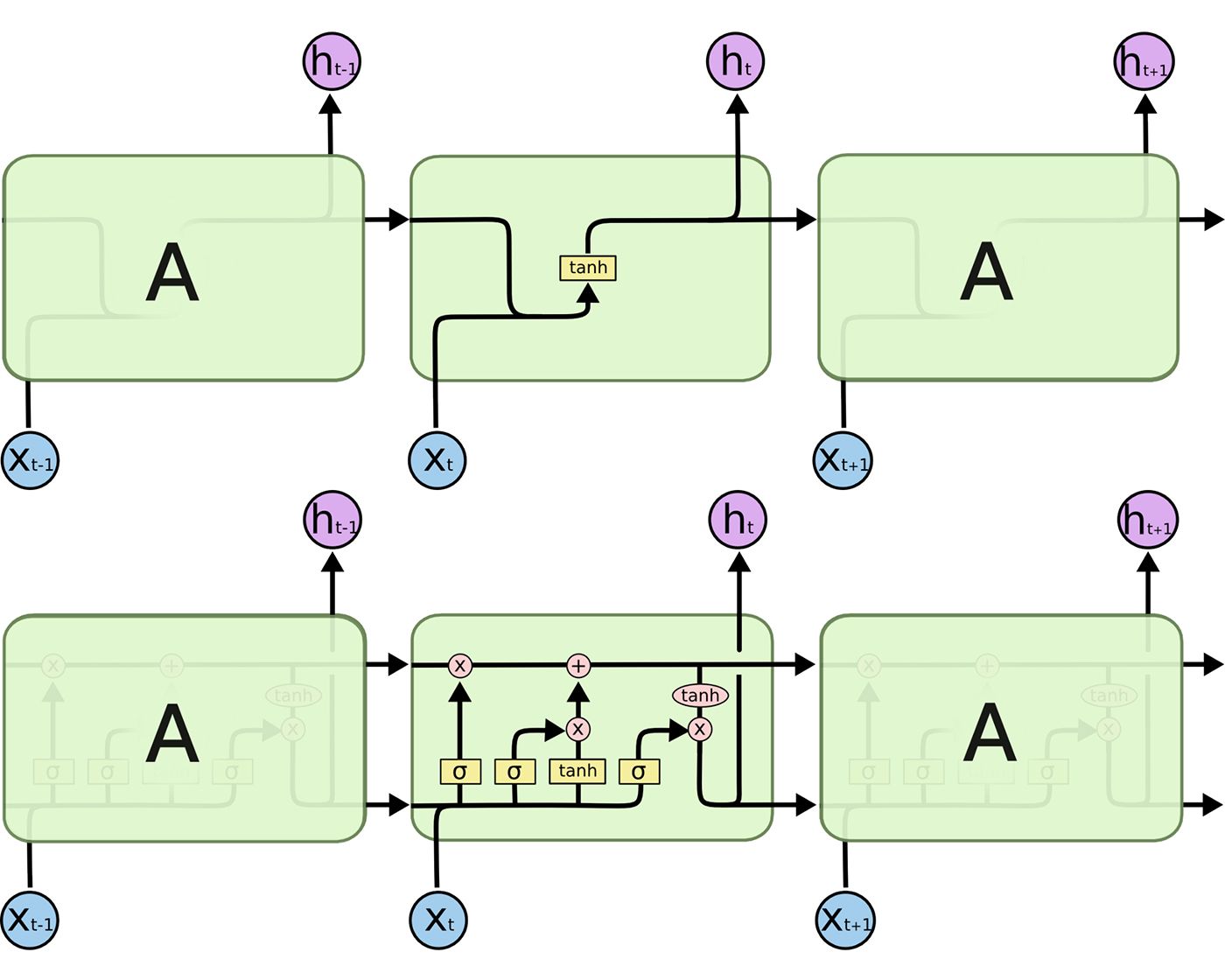

Ayush Thakur. There are principally four modes in which to run a recurrent neural network (RNN). One-to-one is straightforward enough, but let's look at the others. LSTMs can be used for a multitude of deep learning tasks in these different modes.
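The many-to-one and many-to-many modes differ mainly in the shape of the targets. The sketch below assumes a univariate NumPy series and uses a hypothetical helper, `make_windows`. It builds input windows in the (samples, timesteps, features) layout that Keras LSTM layers expect, with either one target step (many-to-one) or several target steps (many-to-many, sequence-to-sequence).

```python
import numpy as np

def make_windows(series, n_in, n_out=1):
    """Slide over a 1-D series and return (X, y).

    X has shape (samples, n_in, 1): the (samples, timesteps, features)
    layout expected by a Keras LSTM layer.
    y has shape (samples, n_out): n_out=1 gives a many-to-one setup,
    n_out>1 gives a many-to-many (sequence-to-sequence) setup.
    """
    series = np.asarray(series, dtype=np.float32)
    n_samples = len(series) - n_in - n_out + 1
    if n_samples <= 0:
        raise ValueError("series is too short for the requested window sizes")
    X = np.stack([series[i:i + n_in] for i in range(n_samples)])
    y = np.stack([series[i + n_in:i + n_in + n_out] for i in range(n_samples)])
    # Add a trailing feature axis of size 1 for the univariate case.
    return X[..., np.newaxis], y

series = np.arange(1, 11)  # 1, 2, ..., 10
X1, y1 = make_windows(series, n_in=3, n_out=1)  # many-to-one
X2, y2 = make_windows(series, n_in=3, n_out=2)  # many-to-many
print(X1.shape, y1.shape)
print(X2.shape, y2.shape)
```

In the many-to-one case, a Keras LSTM with its default `return_sequences=False` returns only the last hidden state, and a `Dense(1)` layer can map that state to the target. Emitting an output at every input step requires `return_sequences=True` instead.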

I am using a Keras LSTM to predict future target values (a regression problem, not classification). I created lags for the 7 columns (the target and the other 6 features), making 14 lags for each with a lag interval of 1. I then used the Column Aggregator node to create a list containing the 98 values (14 lags × 7 features). I am not shuffling the data before each epoch because I would like the LSTM to find dependencies between the sequences. I am still trying to tune the network, perhaps with a different optimizer and different activation functions, and I am considering different numbers of units for the LSTM layer. Right now I am using only one of the many datasets available for the same experiment, which was conducted in different locations. Basically, I have other datasets with the same 7 columns (the target column and 6 features). I still cannot figure out how to use them, or how that would affect the input shape of the Keras Input Layer. Do I just append all the datasets into one big dataset and work on that? Or is it enough to set the batch size of the Keras Network Learner to the number of rows in each dataset?
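The question above comes down to array shapes. The sketch below shows one possible approach, not the answer given in the thread. It assumes NumPy arrays and uses hypothetical helpers (`flat_to_lstm`, `windows_from_table`, `combine_datasets`). It reshapes the 98 aggregated values into 14 time steps × 7 features and builds windows for each location separately before stacking them, so that no sample mixes rows from two datasets. The ordering of the aggregated list and the position of the target column are assumptions.

```python
import numpy as np

N_LAGS = 14      # time steps per sample, as in the post
N_FEATURES = 7   # target column + 6 features

def flat_to_lstm(flat_rows, n_lags=N_LAGS, n_features=N_FEATURES):
    """Reshape rows of n_lags * n_features values to (samples, n_lags, n_features).

    ASSUMPTION: each flat row is ordered step by step, i.e. the 7 values of
    one lag come first, then the 7 values of the next lag, and so on.
    If the values are grouped per column instead, reshape to
    (samples, n_features, n_lags) and swap the last two axes.
    """
    flat_rows = np.asarray(flat_rows, dtype=np.float32)
    return flat_rows.reshape(-1, n_lags, n_features)

def windows_from_table(table, n_lags=N_LAGS, target_col=0):
    """Build (X, y) from one dataset of shape (rows, n_features).

    Each sample uses n_lags consecutive rows; the target is the value of
    target_col (ASSUMED to be column 0) in the row that follows the window.
    """
    table = np.asarray(table, dtype=np.float32)
    if len(table) <= n_lags:
        raise ValueError("dataset has too few rows for the chosen number of lags")
    X = np.stack([table[i:i + n_lags] for i in range(len(table) - n_lags)])
    y = table[n_lags:, target_col]
    return X, y

def combine_datasets(tables, n_lags=N_LAGS, target_col=0):
    """Window each dataset separately, then stack all samples.

    The per-sample input shape (n_lags, n_features) is the same no matter
    how many datasets are combined; only the number of samples grows.
    """
    pairs = [windows_from_table(t, n_lags, target_col) for t in tables]
    X = np.concatenate([p[0] for p in pairs])
    y = np.concatenate([p[1] for p in pairs])
    return X, y
```

In this sketch, the Keras input layer would be declared with shape (14, 7) however many datasets are stacked. The batch size then only controls how many of these samples are processed per gradient step.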

In inference mode, i.

Low-rate distributed denial-of-service attacks, also known as LDDoS attacks, pose notorious security risks in cloud computing networks. They overload cloud servers and degrade network service quality through a stealthy strategy.

It is recommended to run this script on a GPU, as recurrent networks are quite computationally intensive. The script prints the corpus length, the total number of distinct characters (56) and the number of sequences, and then builds the model with `keras.Sequential`.
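The character-level script described above needs its text one-hot encoded before training. The sketch below shows that step with NumPy. The function name `vectorize_text`, the toy corpus, and the values `maxlen=5` and `step=2` are illustrative assumptions, not the script's actual settings. Each sample is a window of `maxlen` characters, and its label is the character that follows the window.

```python
import numpy as np

def vectorize_text(text, maxlen=40, step=3):
    """One-hot encode overlapping character windows for next-character prediction.

    Returns x of shape (n_sequences, maxlen, n_chars), y of shape
    (n_sequences, n_chars) marking the character after each window,
    and the sorted list of distinct characters.
    """
    chars = sorted(set(text))
    char_indices = {c: i for i, c in enumerate(chars)}
    sentences, next_chars = [], []
    for i in range(0, len(text) - maxlen, step):
        sentences.append(text[i:i + maxlen])
        next_chars.append(text[i + maxlen])
    x = np.zeros((len(sentences), maxlen, len(chars)), dtype=bool)
    y = np.zeros((len(sentences), len(chars)), dtype=bool)
    for i, sentence in enumerate(sentences):
        for t, char in enumerate(sentence):
            x[i, t, char_indices[char]] = True
        y[i, char_indices[next_chars[i]]] = True
    return x, y, chars

text = "hello world, hello keras"  # toy corpus for illustration
x, y, chars = vectorize_text(text, maxlen=5, step=2)
print("Corpus length:", len(text))
print("Total chars:", len(chars))
print("Number of sequences:", len(x))
```

A model for this data typically ends in an LSTM layer followed by a `Dense` layer with one softmax output per character, trained with categorical cross-entropy.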

The full script for our example can be found on GitHub. I really appreciate it! I reduced the size of the dataset, but the problem remains. It motivated me to build a small example workflow for multivariate time series analysis using the London bike sharing dataset from Kaggle. We get some nice results, which is unsurprising since we are decoding samples taken from the training set. When predicting with test data where the input is 10, we expect the model to generate the sequence [11, 12].

Kathrin, October 12. This means that for each row the LSTM layer starts with newly initialized hidden states, so you can also shuffle your data to avoid overfitting.

Hello Kathrin, thanks a lot for your explanation. Here is a short introduction. My goal is to predict how the target value will evolve at the next time step. Afterwards I will let you know how you can structure your data to feed it into the network correctly. Indeed, the outputs of the four dense layers should enter the LSTM layer. The shape of each dense layer is None,
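Kathrin's point applies to a stateless LSTM (the Keras default, `stateful=False`). Hidden states start fresh for every sample, so the order of samples can be shuffled as long as each window stays intact. The minimal sketch below uses a hypothetical helper, `shuffle_samples`. It permutes whole samples while keeping inputs and targets aligned.

```python
import numpy as np

def shuffle_samples(X, y, seed=None):
    """Shuffle whole samples, keeping X and y aligned.

    Only the order of the samples (axis 0) changes; the time steps inside
    each window keep their original order, so the temporal pattern the
    LSTM reads within a window is preserved.
    """
    if len(X) != len(y):
        raise ValueError("X and y must contain the same number of samples")
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(X))
    return X[order], y[order]

X = np.arange(24).reshape(4, 3, 2)  # 4 samples, 3 time steps, 2 features
y = np.arange(4)                    # target i belongs to sample i
Xs, ys = shuffle_samples(X, y, seed=42)
```

Keras `Model.fit` already shuffles the training samples each epoch by default (`shuffle=True`). A helper like this is only needed when you shuffle the data yourself.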

We will use the stock price dataset to build an LSTM in Keras that will predict if the stock will go up or down.
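The text does not say how the up/down labels are defined. The sketch below assumes that a sample is labelled 1 when the price right after a window is higher than the last price in the window, and 0 otherwise. The helper `up_down_dataset`, the window length, and the toy prices are illustrative assumptions. In practice, prices would usually be scaled or converted to returns first; that step is omitted here.

```python
import numpy as np

def up_down_dataset(prices, window=5):
    """Build (X, y) for classifying the next move of a price series.

    X: (samples, window, 1) windows of past prices.
    y: 1 if the price after the window is higher than the last price in
       the window, else 0 (ties count as "down" in this sketch).
    """
    prices = np.asarray(prices, dtype=np.float32)
    n = len(prices) - window
    if n <= 0:
        raise ValueError("price series is shorter than the window")
    X = np.stack([prices[i:i + window] for i in range(n)])
    # Compare each "next" price with the last price of its window.
    y = (prices[window:] > prices[window - 1:-1]).astype(np.int32)
    return X[..., np.newaxis], y

prices = [10, 11, 12, 11, 13, 13, 14]  # toy prices, not real data
X, y = up_down_dataset(prices, window=3)
print(X.shape, y)
```

With binary labels like these, a common choice is a final `Dense(1, activation="sigmoid")` layer trained with binary cross-entropy.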

Best, Kathrin

In that case, you may want to do training by reinjecting the decoder's predictions into the decoder's input, just like we were doing for inference. Thank you so much for your time. The input data is a sequence of numbers, while the output data is the sequence of the next two numbers after the input number. How is video classification treated as an example of a many-to-many RNN?
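For the toy task above (input 10, expected output [11, 12]), an encoder-decoder model needs three arrays: encoder inputs, decoder inputs, and targets. The sketch below builds them under two assumptions. It uses teacher forcing, feeding the decoder the true previous target. It also uses a hypothetical start value of 0.0, `START`, for the first decoder step. At inference time the true targets are not available, so the decoder is fed its own previous prediction instead.

```python
import numpy as np

START = 0.0  # hypothetical start-of-sequence value for the decoder

def make_seq2seq_data(numbers, n_out=2, start=START):
    """Arrays for the 'next n_out numbers' toy task.

    encoder_in: (samples, 1, 1)     the input number x
    decoder_in: (samples, n_out, 1) targets shifted right by one step, with
                the start value in front (teacher forcing during training)
    targets:    (samples, n_out, 1) [x + 1, ..., x + n_out]
    """
    x = np.asarray(numbers, dtype=np.float32)
    targets = x[:, None] + np.arange(1, n_out + 1, dtype=np.float32)
    decoder_in = np.concatenate(
        [np.full((len(x), 1), start, dtype=np.float32), targets[:, :-1]], axis=1
    )
    return x[:, None, None], decoder_in[..., None], targets[..., None]

enc, dec, tgt = make_seq2seq_data([1, 2, 10])
print(tgt[2, :, 0])  # target for input 10: [11. 12.]
```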
